Our cluster consists of 8 rack-mountable boxes, each one a Dell SC1425. Each box has a 3.0 GHz Hyper-Threaded Xeon CPU, 512 MB of RAM, dual Gigabit NICs and support for remote management protocols such as IPMI (Intelligent Platform Management Interface) and SoL (Serial over LAN).

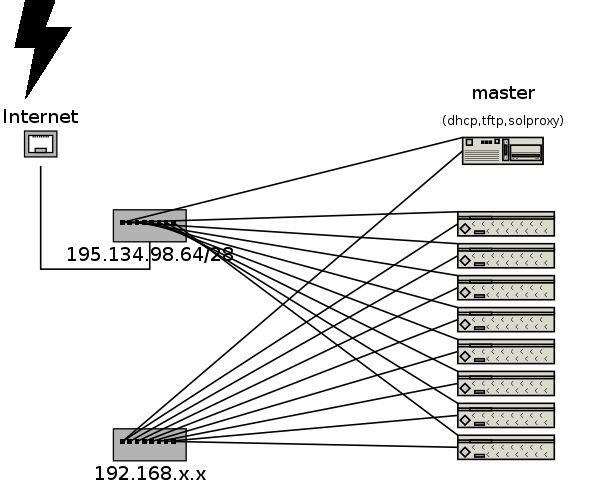

The topology of our network setup is a little complicated, because from the beginning the main requirement has been completely remote management, including hard reboots, software and even operating system installations, and serial console access. As a result we decided to use another box, the so-called master, to provide the necessary services such as DHCP, TFTP and solproxy.

A serial console was the only way for us to manage the cluster even when the operating system hasn't booted, and since these machines offer "BIOS console redirection" it seemed like a really good choice. Fortunately the serial port is not the only way to get a serial console: it is also available over the LAN through a proprietary protocol, SoL (Serial over LAN), which means that you can easily access each box's console given its IP address. Since this is a bit insecure, we decided to dedicate one of the two NICs on each box to SoL access, connect these NICs through an isolated hub and use internal IP addresses (192.168.x.x) for this purpose. The second NIC is used by the operating system for Internet access, and these NICs are connected through a completely separate hub, uplinked to the Internet. The only way to access a serial console is to log in to master and run the (proprietary) solproxy program, passing as a parameter the internal IP address of the box to be accessed. Moreover, by logging in to master and using the internal IP address of a box, one can perform other management work such as powering boxes on and off or measuring temperatures. These and many more jobs are performed via IPMI and the open-source ipmitool.
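The management jobs described above can be sketched as a small Python wrapper around ipmitool, meant to be run on master. This is only an illustration under stated assumptions: the 192.168.0.x addresses, the `admin` user name and the helper names (`ipmi_command`, `power`, `temperatures`, `run`) are hypothetical and not taken from our actual setup. The password is expected in the `IPMI_PASSWORD` environment variable, which ipmitool reads when given `-E`.

```python
import subprocess

# Hypothetical internal addresses of the 8 management NICs (assumption:
# the real cluster only guarantees that they are in 192.168.x.x).
NODES = [f"192.168.0.{i}" for i in range(1, 9)]

POWER_ACTIONS = {"status", "on", "off", "cycle"}


def ipmi_command(host, *args, user="admin"):
    """Build an ipmitool command line for one box's management interface."""
    # -I lan : talk IPMI over the LAN
    # -H     : internal IP address of the box
    # -U     : user name (hypothetical default)
    # -E     : read the password from the IPMI_PASSWORD environment variable
    return ["ipmitool", "-I", "lan", "-H", host, "-U", user, "-E", *args]


def power(host, action):
    """Command that queries or changes the power state of a box."""
    if action not in POWER_ACTIONS:
        raise ValueError(f"unsupported power action: {action}")
    return ipmi_command(host, "chassis", "power", action)


def temperatures(host):
    """Command that lists the temperature sensors of a box."""
    return ipmi_command(host, "sdr", "type", "Temperature")


def run(command):
    """Execute a built command and return its standard output."""
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    # Only print the commands; call run() on master to actually execute them.
    for node in NODES:
        print(" ".join(power(node, "status")))
```

Building the command as a list and keeping execution in a separate `run` function makes it easy to inspect what would be sent to each box before touching the hardware.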

The next problem to solve was how to install an operating system completely remotely, without having to visit the computer room and load installation CDs. The solution is PXE boot, in other words booting via the LAN. Right after a machine is powered up you choose from the BIOS setup to boot via PXE. The appropriate interface then gets assigned an IP address from the DHCP server on master and requests a particular file, the PXE bootloader (usually pxelinux.0). The bootloader is transferred via TFTP, as are the kernel and initrd you choose to boot. Note that it is important to pass the parameter console=ttyS0,9600 to the kernel, since you don't have any other kind of access to the machine. After that the installation proceeds as usual, at least for Scientific Linux, which we used.
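To make the boot step more concrete, here is a minimal Python sketch that generates a PXELINUX configuration file carrying the console=ttyS0,9600 parameter. The file names `vmlinuz` and `initrd.img`, the `install` label and the function name `pxelinux_config` are assumptions chosen for illustration; the real names depend on the distribution's netboot images and on how the TFTP directory on master is laid out.

```python
def pxelinux_config(kernel="vmlinuz", initrd="initrd.img",
                    port=0, baud=9600, extra_args=()):
    """Return the text of a PXELINUX config that boots with a serial console.

    kernel and initrd are paths relative to the TFTP root (hypothetical
    defaults); port and baud describe the serial line, ttyS<port>.
    """
    # Kernel parameter that sends the installer's console to the serial port.
    console = f"console=ttyS{port},{baud}"
    append = " ".join([f"initrd={initrd}", console, *extra_args])
    lines = [
        # SERIAL makes the bootloader itself use the serial port; it should
        # be the first directive in the file.
        f"SERIAL {port} {baud}",
        "DEFAULT install",
        "LABEL install",
        f"  KERNEL {kernel}",
        f"  APPEND {append}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # The output would typically be saved as pxelinux.cfg/default under
    # the TFTP root on master.
    print(pxelinux_config(), end="")
```

With the defaults, the generated `APPEND` line passes both the initrd and the serial console setting to the kernel, so the installer remains reachable through SoL from the first boot message onwards.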